
| author | Roman Lebedev <lebedev.ri@gmail.com> | 2018-05-29 13:13:28 +0300 |

|---|---|---|

| committer | Dominic Hamon <dominichamon@users.noreply.github.com> | 2018-05-29 11:13:28 +0100 |

| commit | a6a1b0d765b116bb9c777d45a299ae84a2760981 (patch) | |

| tree | 1e11e6dec73c7354d4b05ba27ea76685658507e1 | |

| parent | ec0f69c28e412ec1fb1e8d170ada4faeebdc8293 (diff) | |

| download | google-benchmark-a6a1b0d765b116bb9c777d45a299ae84a2760981.tar.gz | |

Benchmarking is hard. Making sense of the benchmarking results is even harder. (#593)

The first problem you have to solve yourself. The second one can be aided.

The benchmark library can compute some statistics over the repetitions,

which helps with grasping the results somewhat.

But that is only for the one set of results. It does not really help to compare

the two benchmark results, which is the interesting bit. Thankfully, there are

these bundled `tools/compare.py` and `tools/compare_bench.py` scripts.

They can provide a diff between two benchmarking results. Yay!

Except not really. It's just a diff: while it is very informative and better than

nothing, it does not really help answer The Question: am I just looking at the noise?

It's like not having these per-benchmark statistics...

Roughly, we can formulate the question as:

> Are these two benchmarks the same?

> Did my change actually change anything, or is the difference below the noise level?

Well, this really sounds like a [null hypothesis](https://en.wikipedia.org/wiki/Null_hypothesis), does it not?

So maybe we can use statistics here, and solve all our problems?

lol, no, it won't solve all the problems. But maybe it will act as a tool,

to better understand the output, just like the usual statistics on the repetitions...

I'm making an assumption here that most people care about the change

of the average value, not the standard deviation. Thus I believe we can use a t-test,

be it either [Student's t-test](https://en.wikipedia.org/wiki/Student%27s_t-test), or [Welch's t-test](https://en.wikipedia.org/wiki/Welch%27s_t-test).

**EDIT**: however, after @dominichamon's review, it was decided that it is better

to use the more robust [Mann–Whitney U test](https://en.wikipedia.org/wiki/Mann–Whitney_U_test).

I'm using [scipy.stats.mannwhitneyu](https://docs.scipy.org/doc/scipy/reference/generated/scipy.stats.mannwhitneyu.html#scipy.stats.mannwhitneyu).

There are two new user-facing knobs:

```
$ ./compare.py --help
usage: compare.py [-h] [-u] [--alpha UTEST_ALPHA]
                  {benchmarks,filters,benchmarksfiltered} ...

versatile benchmark output compare tool

<...>

optional arguments:
  -h, --help            show this help message and exit
  -u, --utest           Do a two-tailed Mann-Whitney U test with the null
                        hypothesis that it is equally likely that a randomly
                        selected value from one sample will be less than or
                        greater than a randomly selected value from a second
                        sample. WARNING: requires **LARGE** (9 or more)
                        number of repetitions to be meaningful!
  --alpha UTEST_ALPHA   significance level alpha. if the calculated p-value is
                        below this value, then the result is said to be
                        statistically significant and the null hypothesis is
                        rejected. (default: 0.0500)
```

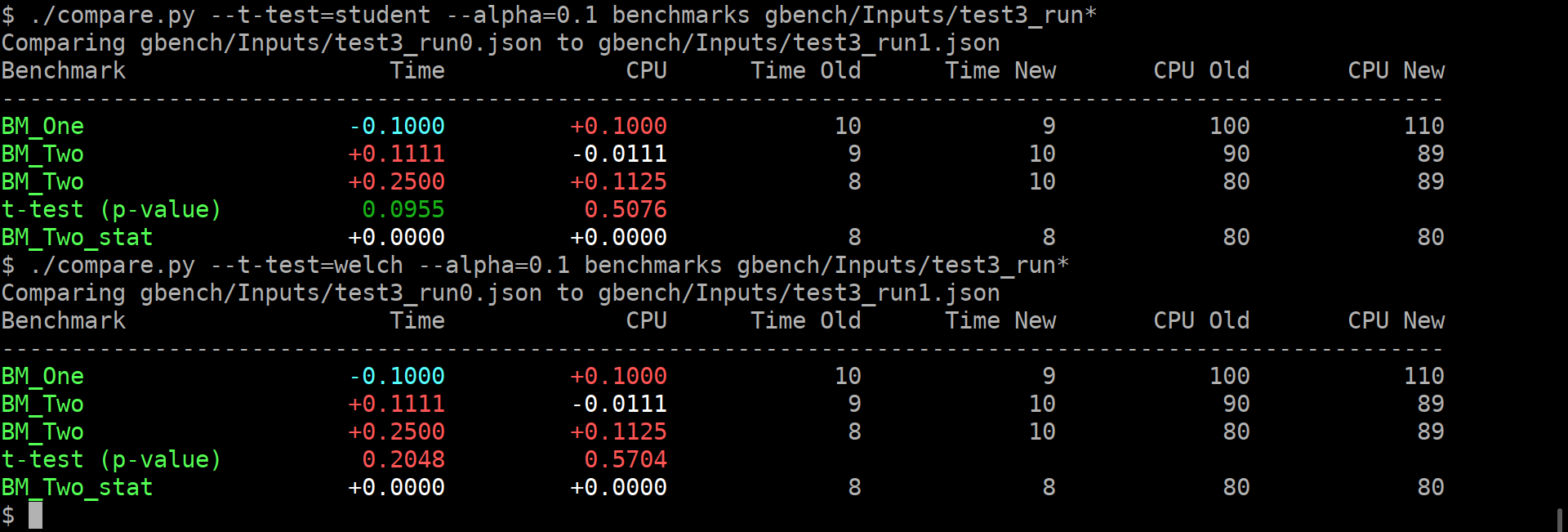

Example output:

As you can guess, the alpha does not affect anything but the coloring of the computed p-values.

If it is green, then the change in the average values is statistically-significant.
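To make the U test and the alpha threshold concrete, here is a minimal standard-library sketch. It is not the code from this commit, which calls `scipy.stats.mannwhitneyu`. The helper names `u_statistic`, `exact_two_sided_pvalue` and `is_significant` are hypothetical, the timings are made up, and the p-value is computed by brute-force enumeration of relabelings (an exact permutation test), so it may differ from what scipy reports.

```python
from itertools import combinations


def u_statistic(a, b):
    """Count pairs (x, y), x from a and y from b, with x > y; a tie counts as 0.5."""
    return sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)


def exact_two_sided_pvalue(a, b):
    """Fraction of relabelings whose U is at least as extreme as the observed U.

    Hypothetical helper: it enumerates every way of splitting the pooled
    timings into groups of the original sizes, so it is only practical
    for small repetition counts.
    """
    pooled = list(a) + list(b)
    n, m = len(a), len(b)
    center = n * m / 2.0  # expected U under the null hypothesis
    observed = abs(u_statistic(a, b) - center)
    hits = total = 0
    for idx in combinations(range(n + m), n):
        chosen = set(idx)
        xs = [pooled[i] for i in idx]
        ys = [pooled[i] for i in range(n + m) if i not in chosen]
        total += 1
        if abs(u_statistic(xs, ys) - center) >= observed:
            hits += 1
    return hits / total


def is_significant(pvalue, alpha=0.05):
    """Same rule as the coloring: significant when the p-value is below alpha."""
    return pvalue < alpha


# Made-up timings: three repetitions each, with no overlap at all.
baseline = [10.0, 11.0, 12.0]
contender = [7.0, 8.0, 9.0]
p = exact_two_sided_pvalue(baseline, contender)
print(p, is_significant(p))
```

With three repetitions per side there are only 20 possible relabelings, and two of them are as extreme as a complete separation, so the smallest p-value this exact test can produce is 2/20 = 0.1. That is above the default alpha of 0.05 even though the two samples do not overlap at all, which is one way to see why the help text asks for a large number of repetitions.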

I'm detecting the repetitions by matching name. This way, no changes to the json are _needed_.

Caveats:

* This won't work if the json is not in the same order as outputted by the benchmark,

or if the parsing does not retain the ordering.

* This won't work if after the grouped repetitions there isn't at least one row with

a different name (e.g. a statistic). Since there isn't a knob to disable printing of statistics

(only the other way around), I'm not too worried about this.

* **The results will be wrong if the repetition count is different between the two benchmarks being compared.**

* Even though I have added (hopefully full) test coverage, the code of these python tools is starting

to look a bit jumbled.

* So far I have added this only to the `tools/compare.py`.

Should I add it to `tools/compare_bench.py` too?

Or should we deduplicate them (by removing the latter one)?
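The name-matching idea described above can be sketched in a few lines. This is an illustration only: the commit itself tracks a `last_name` variable inside `generate_difference_report`, whereas the hypothetical helper `group_repetitions` below uses `itertools.groupby`. The sample entries reuse the names and `real_time` values from `tools/gbench/Inputs/test3_run0.json`.

```python
from itertools import groupby


def group_repetitions(benchmarks):
    """Collect real_time values of consecutive entries that share a name."""
    return [
        (name, [entry["real_time"] for entry in run])
        for name, run in groupby(benchmarks, key=lambda entry: entry["name"])
    ]


# Names and real_time values taken from tools/gbench/Inputs/test3_run0.json.
run0 = [
    {"name": "BM_One", "real_time": 10},
    {"name": "BM_Two", "real_time": 9},
    {"name": "BM_Two", "real_time": 8},
    {"name": "BM_Two_stat", "real_time": 8},
]
print(group_repetitions(run0))
# prints [('BM_One', [10]), ('BM_Two', [9, 8]), ('BM_Two_stat', [8])]
```

Because `groupby` only merges adjacent entries, repetitions that are not next to each other end up in separate groups. That is the same ordering dependency as in the first caveat.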

| -rwxr-xr-x | tools/compare.py | 62 |

| -rw-r--r-- | tools/gbench/Inputs/test3_run0.json | 39 |

| -rw-r--r-- | tools/gbench/Inputs/test3_run1.json | 39 |

| -rw-r--r-- | tools/gbench/report.py | 150 |

4 files changed, 270 insertions, 20 deletions

diff --git a/tools/compare.py b/tools/compare.py index f0a4455..f293306 100755 --- a/tools/compare.py +++ b/tools/compare.py @@ -35,6 +35,22 @@ def check_inputs(in1, in2, flags): def create_parser(): parser = ArgumentParser( description='versatile benchmark output compare tool') + + utest = parser.add_argument_group() + utest.add_argument( + '-u', + '--utest', + action="store_true", + help="Do a two-tailed Mann-Whitney U test with the null hypothesis that it is equally likely that a randomly selected value from one sample will be less than or greater than a randomly selected value from a second sample.\nWARNING: requires **LARGE** (no less than 9) number of repetitions to be meaningful!") + alpha_default = 0.05 + utest.add_argument( + "--alpha", + dest='utest_alpha', + default=alpha_default, + type=float, + help=("significance level alpha. if the calculated p-value is below this value, then the result is said to be statistically significant and the null hypothesis is rejected.\n(default: %0.4f)") % + alpha_default) + subparsers = parser.add_subparsers( help='This tool has multiple modes of operation:', dest='mode') @@ -139,8 +155,8 @@ def main(): parser = create_parser() args, unknown_args = parser.parse_known_args() if args.mode is None: - parser.print_help() - exit(1) + parser.print_help() + exit(1) assert not unknown_args benchmark_options = args.benchmark_options @@ -205,7 +221,8 @@ def main(): json2_orig, filter_contender, replacement) # Diff and output - output_lines = gbench.report.generate_difference_report(json1, json2) + output_lines = gbench.report.generate_difference_report( + json1, json2, args.utest, args.utest_alpha) print(description) for ln in output_lines: print(ln) @@ -228,6 +245,37 @@ class TestParser(unittest.TestCase): def test_benchmarks_basic(self): parsed = self.parser.parse_args( ['benchmarks', self.testInput0, self.testInput1]) + self.assertFalse(parsed.utest) + self.assertEqual(parsed.mode, 'benchmarks') + 
self.assertEqual(parsed.test_baseline[0].name, self.testInput0) + self.assertEqual(parsed.test_contender[0].name, self.testInput1) + self.assertFalse(parsed.benchmark_options) + + def test_benchmarks_basic_with_utest(self): + parsed = self.parser.parse_args( + ['-u', 'benchmarks', self.testInput0, self.testInput1]) + self.assertTrue(parsed.utest) + self.assertEqual(parsed.utest_alpha, 0.05) + self.assertEqual(parsed.mode, 'benchmarks') + self.assertEqual(parsed.test_baseline[0].name, self.testInput0) + self.assertEqual(parsed.test_contender[0].name, self.testInput1) + self.assertFalse(parsed.benchmark_options) + + def test_benchmarks_basic_with_utest(self): + parsed = self.parser.parse_args( + ['--utest', 'benchmarks', self.testInput0, self.testInput1]) + self.assertTrue(parsed.utest) + self.assertEqual(parsed.utest_alpha, 0.05) + self.assertEqual(parsed.mode, 'benchmarks') + self.assertEqual(parsed.test_baseline[0].name, self.testInput0) + self.assertEqual(parsed.test_contender[0].name, self.testInput1) + self.assertFalse(parsed.benchmark_options) + + def test_benchmarks_basic_with_utest_alpha(self): + parsed = self.parser.parse_args( + ['--utest', '--alpha=0.314', 'benchmarks', self.testInput0, self.testInput1]) + self.assertTrue(parsed.utest) + self.assertEqual(parsed.utest_alpha, 0.314) self.assertEqual(parsed.mode, 'benchmarks') self.assertEqual(parsed.test_baseline[0].name, self.testInput0) self.assertEqual(parsed.test_contender[0].name, self.testInput1) @@ -236,6 +284,7 @@ class TestParser(unittest.TestCase): def test_benchmarks_with_remainder(self): parsed = self.parser.parse_args( ['benchmarks', self.testInput0, self.testInput1, 'd']) + self.assertFalse(parsed.utest) self.assertEqual(parsed.mode, 'benchmarks') self.assertEqual(parsed.test_baseline[0].name, self.testInput0) self.assertEqual(parsed.test_contender[0].name, self.testInput1) @@ -244,6 +293,7 @@ class TestParser(unittest.TestCase): def test_benchmarks_with_remainder_after_doubleminus(self): 
parsed = self.parser.parse_args( ['benchmarks', self.testInput0, self.testInput1, '--', 'e']) + self.assertFalse(parsed.utest) self.assertEqual(parsed.mode, 'benchmarks') self.assertEqual(parsed.test_baseline[0].name, self.testInput0) self.assertEqual(parsed.test_contender[0].name, self.testInput1) @@ -252,6 +302,7 @@ class TestParser(unittest.TestCase): def test_filters_basic(self): parsed = self.parser.parse_args( ['filters', self.testInput0, 'c', 'd']) + self.assertFalse(parsed.utest) self.assertEqual(parsed.mode, 'filters') self.assertEqual(parsed.test[0].name, self.testInput0) self.assertEqual(parsed.filter_baseline[0], 'c') @@ -261,6 +312,7 @@ class TestParser(unittest.TestCase): def test_filters_with_remainder(self): parsed = self.parser.parse_args( ['filters', self.testInput0, 'c', 'd', 'e']) + self.assertFalse(parsed.utest) self.assertEqual(parsed.mode, 'filters') self.assertEqual(parsed.test[0].name, self.testInput0) self.assertEqual(parsed.filter_baseline[0], 'c') @@ -270,6 +322,7 @@ class TestParser(unittest.TestCase): def test_filters_with_remainder_after_doubleminus(self): parsed = self.parser.parse_args( ['filters', self.testInput0, 'c', 'd', '--', 'f']) + self.assertFalse(parsed.utest) self.assertEqual(parsed.mode, 'filters') self.assertEqual(parsed.test[0].name, self.testInput0) self.assertEqual(parsed.filter_baseline[0], 'c') @@ -279,6 +332,7 @@ class TestParser(unittest.TestCase): def test_benchmarksfiltered_basic(self): parsed = self.parser.parse_args( ['benchmarksfiltered', self.testInput0, 'c', self.testInput1, 'e']) + self.assertFalse(parsed.utest) self.assertEqual(parsed.mode, 'benchmarksfiltered') self.assertEqual(parsed.test_baseline[0].name, self.testInput0) self.assertEqual(parsed.filter_baseline[0], 'c') @@ -289,6 +343,7 @@ class TestParser(unittest.TestCase): def test_benchmarksfiltered_with_remainder(self): parsed = self.parser.parse_args( ['benchmarksfiltered', self.testInput0, 'c', self.testInput1, 'e', 'f']) + 
self.assertFalse(parsed.utest) self.assertEqual(parsed.mode, 'benchmarksfiltered') self.assertEqual(parsed.test_baseline[0].name, self.testInput0) self.assertEqual(parsed.filter_baseline[0], 'c') @@ -299,6 +354,7 @@ class TestParser(unittest.TestCase): def test_benchmarksfiltered_with_remainder_after_doubleminus(self): parsed = self.parser.parse_args( ['benchmarksfiltered', self.testInput0, 'c', self.testInput1, 'e', '--', 'g']) + self.assertFalse(parsed.utest) self.assertEqual(parsed.mode, 'benchmarksfiltered') self.assertEqual(parsed.test_baseline[0].name, self.testInput0) self.assertEqual(parsed.filter_baseline[0], 'c') diff --git a/tools/gbench/Inputs/test3_run0.json b/tools/gbench/Inputs/test3_run0.json new file mode 100644 index 0000000..c777bb0 --- /dev/null +++ b/tools/gbench/Inputs/test3_run0.json @@ -0,0 +1,39 @@ +{ + "context": { + "date": "2016-08-02 17:44:46", + "num_cpus": 4, + "mhz_per_cpu": 4228, + "cpu_scaling_enabled": false, + "library_build_type": "release" + }, + "benchmarks": [ + { + "name": "BM_One", + "iterations": 1000, + "real_time": 10, + "cpu_time": 100, + "time_unit": "ns" + }, + { + "name": "BM_Two", + "iterations": 1000, + "real_time": 9, + "cpu_time": 90, + "time_unit": "ns" + }, + { + "name": "BM_Two", + "iterations": 1000, + "real_time": 8, + "cpu_time": 80, + "time_unit": "ns" + }, + { + "name": "BM_Two_stat", + "iterations": 1000, + "real_time": 8, + "cpu_time": 80, + "time_unit": "ns" + } + ] +} diff --git a/tools/gbench/Inputs/test3_run1.json b/tools/gbench/Inputs/test3_run1.json new file mode 100644 index 0000000..0350333 --- /dev/null +++ b/tools/gbench/Inputs/test3_run1.json @@ -0,0 +1,39 @@ +{ + "context": { + "date": "2016-08-02 17:44:46", + "num_cpus": 4, + "mhz_per_cpu": 4228, + "cpu_scaling_enabled": false, + "library_build_type": "release" + }, + "benchmarks": [ + { + "name": "BM_One", + "iterations": 1000, + "real_time": 9, + "cpu_time": 110, + "time_unit": "ns" + }, + { + "name": "BM_Two", + "iterations": 1000, + 
"real_time": 10, + "cpu_time": 89, + "time_unit": "ns" + }, + { + "name": "BM_Two", + "iterations": 1000, + "real_time": 7, + "cpu_time": 70, + "time_unit": "ns" + }, + { + "name": "BM_Two_stat", + "iterations": 1000, + "real_time": 8, + "cpu_time": 80, + "time_unit": "ns" + } + ] +} diff --git a/tools/gbench/report.py b/tools/gbench/report.py index 0c09098..4cdd3b7 100644 --- a/tools/gbench/report.py +++ b/tools/gbench/report.py @@ -4,6 +4,9 @@ import os import re import copy +from scipy.stats import mannwhitneyu + + class BenchmarkColor(object): def __init__(self, name, code): self.name = name @@ -16,11 +19,13 @@ class BenchmarkColor(object): def __format__(self, format): return self.code + # Benchmark Colors Enumeration BC_NONE = BenchmarkColor('NONE', '') BC_MAGENTA = BenchmarkColor('MAGENTA', '\033[95m') BC_CYAN = BenchmarkColor('CYAN', '\033[96m') BC_OKBLUE = BenchmarkColor('OKBLUE', '\033[94m') +BC_OKGREEN = BenchmarkColor('OKGREEN', '\033[32m') BC_HEADER = BenchmarkColor('HEADER', '\033[92m') BC_WARNING = BenchmarkColor('WARNING', '\033[93m') BC_WHITE = BenchmarkColor('WHITE', '\033[97m') @@ -29,6 +34,7 @@ BC_ENDC = BenchmarkColor('ENDC', '\033[0m') BC_BOLD = BenchmarkColor('BOLD', '\033[1m') BC_UNDERLINE = BenchmarkColor('UNDERLINE', '\033[4m') + def color_format(use_color, fmt_str, *args, **kwargs): """ Return the result of 'fmt_str.format(*args, **kwargs)' after transforming @@ -78,30 +84,82 @@ def filter_benchmark(json_orig, family, replacement=""): for be in json_orig['benchmarks']: if not regex.search(be['name']): continue - filteredbench = copy.deepcopy(be) # Do NOT modify the old name! + filteredbench = copy.deepcopy(be) # Do NOT modify the old name! 
filteredbench['name'] = regex.sub(replacement, filteredbench['name']) filtered['benchmarks'].append(filteredbench) return filtered -def generate_difference_report(json1, json2, use_color=True): +def generate_difference_report( + json1, + json2, + utest=False, + utest_alpha=0.05, + use_color=True): """ Calculate and report the difference between each test of two benchmarks runs specified as 'json1' and 'json2'. """ + assert utest is True or utest is False first_col_width = find_longest_name(json1['benchmarks']) + def find_test(name): for b in json2['benchmarks']: if b['name'] == name: return b return None - first_col_width = max(first_col_width, len('Benchmark')) + + utest_col_name = "U-test (p-value)" + first_col_width = max( + first_col_width, + len('Benchmark'), + len(utest_col_name)) first_line = "{:<{}s}Time CPU Time Old Time New CPU Old CPU New".format( 'Benchmark', 12 + first_col_width) output_strs = [first_line, '-' * len(first_line)] - gen = (bn for bn in json1['benchmarks'] if 'real_time' in bn and 'cpu_time' in bn) + last_name = None + timings_time = [[], []] + timings_cpu = [[], []] + + gen = (bn for bn in json1['benchmarks'] + if 'real_time' in bn and 'cpu_time' in bn) for bn in gen: + fmt_str = "{}{:<{}s}{endc}{}{:+16.4f}{endc}{}{:+16.4f}{endc}{:14.0f}{:14.0f}{endc}{:14.0f}{:14.0f}" + special_str = "{}{:<{}s}{endc}{}{:16.4f}{endc}{}{:16.4f}" + + if last_name is None: + last_name = bn['name'] + if last_name != bn['name']: + MIN_REPETITIONS = 2 + if ((len(timings_time[0]) >= MIN_REPETITIONS) and + (len(timings_time[1]) >= MIN_REPETITIONS) and + (len(timings_cpu[0]) >= MIN_REPETITIONS) and + (len(timings_cpu[1]) >= MIN_REPETITIONS)): + if utest: + def get_utest_color(pval): + if pval >= utest_alpha: + return BC_FAIL + else: + return BC_OKGREEN + time_pvalue = mannwhitneyu( + timings_time[0], timings_time[1], alternative='two-sided').pvalue + cpu_pvalue = mannwhitneyu( + timings_cpu[0], timings_cpu[1], alternative='two-sided').pvalue + output_strs += 
[color_format(use_color, + special_str, + BC_HEADER, + utest_col_name, + first_col_width, + get_utest_color(time_pvalue), + time_pvalue, + get_utest_color(cpu_pvalue), + cpu_pvalue, + endc=BC_ENDC)] + last_name = bn['name'] + timings_time = [[], []] + timings_cpu = [[], []] + other_bench = find_test(bn['name']) if not other_bench: continue @@ -116,26 +174,44 @@ def generate_difference_report(json1, json2, use_color=True): return BC_WHITE else: return BC_CYAN - fmt_str = "{}{:<{}s}{endc}{}{:+16.4f}{endc}{}{:+16.4f}{endc}{:14.0f}{:14.0f}{endc}{:14.0f}{:14.0f}" - tres = calculate_change(bn['real_time'], other_bench['real_time']) - cpures = calculate_change(bn['cpu_time'], other_bench['cpu_time']) - output_strs += [color_format(use_color, fmt_str, - BC_HEADER, bn['name'], first_col_width, - get_color(tres), tres, get_color(cpures), cpures, - bn['real_time'], other_bench['real_time'], - bn['cpu_time'], other_bench['cpu_time'], - endc=BC_ENDC)] + + timings_time[0].append(bn['real_time']) + timings_time[1].append(other_bench['real_time']) + timings_cpu[0].append(bn['cpu_time']) + timings_cpu[1].append(other_bench['cpu_time']) + + tres = calculate_change(timings_time[0][-1], timings_time[1][-1]) + cpures = calculate_change(timings_cpu[0][-1], timings_cpu[1][-1]) + output_strs += [color_format(use_color, + fmt_str, + BC_HEADER, + bn['name'], + first_col_width, + get_color(tres), + tres, + get_color(cpures), + cpures, + timings_time[0][-1], + timings_time[1][-1], + timings_cpu[0][-1], + timings_cpu[1][-1], + endc=BC_ENDC)] return output_strs ############################################################################### # Unit tests + import unittest + class TestReportDifference(unittest.TestCase): def load_results(self): import json - testInputs = os.path.join(os.path.dirname(os.path.realpath(__file__)), 'Inputs') + testInputs = os.path.join( + os.path.dirname( + os.path.realpath(__file__)), + 'Inputs') testOutput1 = os.path.join(testInputs, 'test1_run1.json') testOutput2 = 
os.path.join(testInputs, 'test1_run2.json') with open(testOutput1, 'r') as f: @@ -160,7 +236,8 @@ class TestReportDifference(unittest.TestCase): ['BM_BadTimeUnit', '-0.9000', '+0.2000', '0', '0', '0', '1'], ] json1, json2 = self.load_results() - output_lines_with_header = generate_difference_report(json1, json2, use_color=False) + output_lines_with_header = generate_difference_report( + json1, json2, use_color=False) output_lines = output_lines_with_header[2:] print("\n".join(output_lines_with_header)) self.assertEqual(len(output_lines), len(expect_lines)) @@ -173,7 +250,10 @@ class TestReportDifference(unittest.TestCase): class TestReportDifferenceBetweenFamilies(unittest.TestCase): def load_result(self): import json - testInputs = os.path.join(os.path.dirname(os.path.realpath(__file__)), 'Inputs') + testInputs = os.path.join( + os.path.dirname( + os.path.realpath(__file__)), + 'Inputs') testOutput = os.path.join(testInputs, 'test2_run.json') with open(testOutput, 'r') as f: json = json.load(f) @@ -189,7 +269,8 @@ class TestReportDifferenceBetweenFamilies(unittest.TestCase): json = self.load_result() json1 = filter_benchmark(json, "BM_Z.ro", ".") json2 = filter_benchmark(json, "BM_O.e", ".") - output_lines_with_header = generate_difference_report(json1, json2, use_color=False) + output_lines_with_header = generate_difference_report( + json1, json2, use_color=False) output_lines = output_lines_with_header[2:] print("\n") print("\n".join(output_lines_with_header)) @@ -200,6 +281,41 @@ class TestReportDifferenceBetweenFamilies(unittest.TestCase): self.assertEqual(parts, expect_lines[i]) +class TestReportDifferenceWithUTest(unittest.TestCase): + def load_results(self): + import json + testInputs = os.path.join( + os.path.dirname( + os.path.realpath(__file__)), + 'Inputs') + testOutput1 = os.path.join(testInputs, 'test3_run0.json') + testOutput2 = os.path.join(testInputs, 'test3_run1.json') + with open(testOutput1, 'r') as f: + json1 = json.load(f) + with 
open(testOutput2, 'r') as f: + json2 = json.load(f) + return json1, json2 + + def test_utest(self): + expect_lines = [] + expect_lines = [ + ['BM_One', '-0.1000', '+0.1000', '10', '9', '100', '110'], + ['BM_Two', '+0.1111', '-0.0111', '9', '10', '90', '89'], + ['BM_Two', '+0.2500', '+0.1125', '8', '10', '80', '89'], + ['U-test', '(p-value)', '0.2207', '0.6831'], + ['BM_Two_stat', '+0.0000', '+0.0000', '8', '8', '80', '80'], + ] + json1, json2 = self.load_results() + output_lines_with_header = generate_difference_report( + json1, json2, True, 0.05, use_color=False) + output_lines = output_lines_with_header[2:] + print("\n".join(output_lines_with_header)) + self.assertEqual(len(output_lines), len(expect_lines)) + for i in range(0, len(output_lines)): + parts = [x for x in output_lines[i].split(' ') if x] + self.assertEqual(parts, expect_lines[i]) + + if __name__ == '__main__': unittest.main() |